CacheBlend (Best Paper @ ACM EuroSys'25): Enabling 100% KV Cache Hit Rate in RAG

By LMCache Team

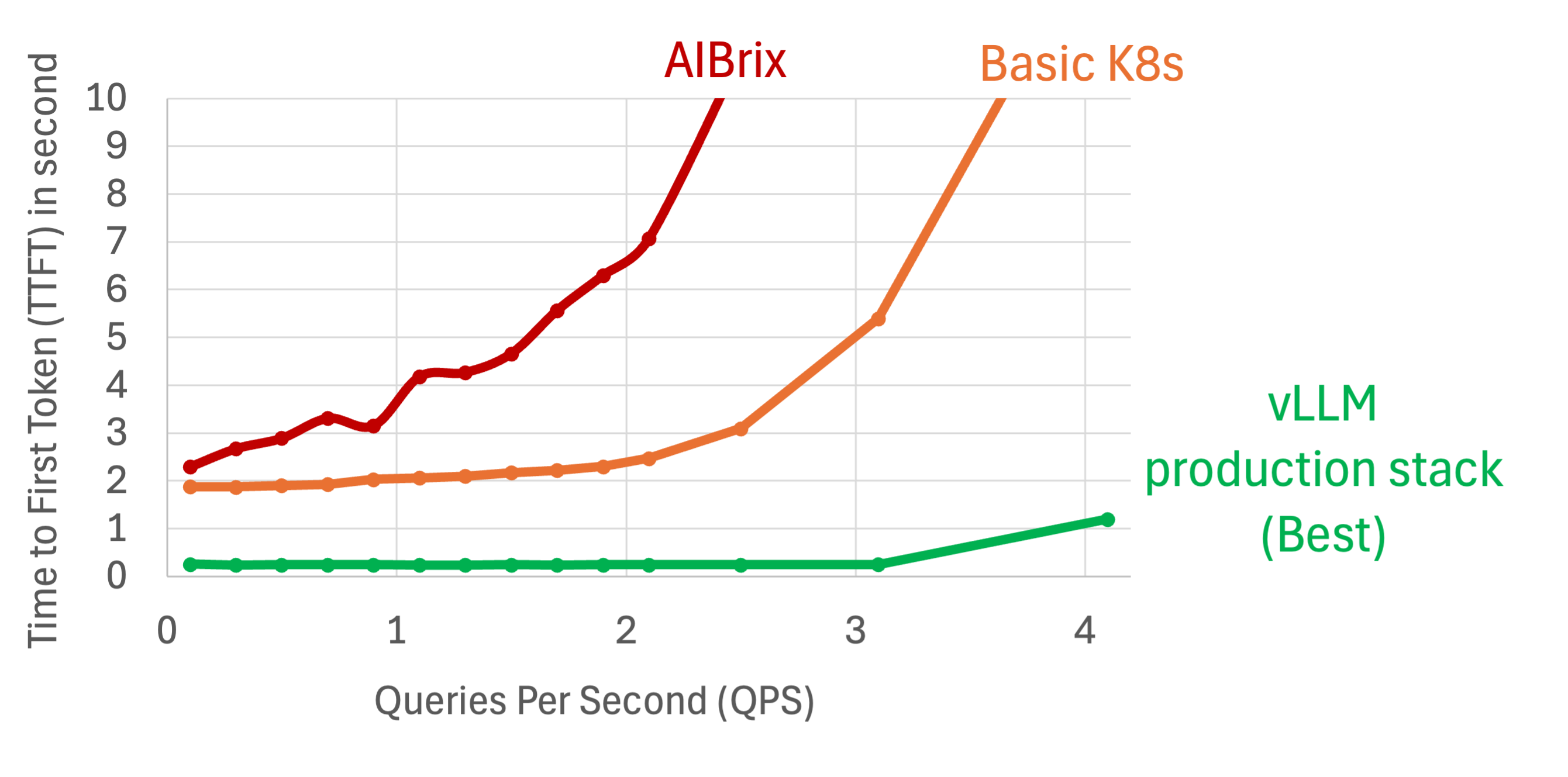

Open-Source LLM Inference Cluster Performing 10x FASTER than SOTA OSS Solution

By Production-Stack Team

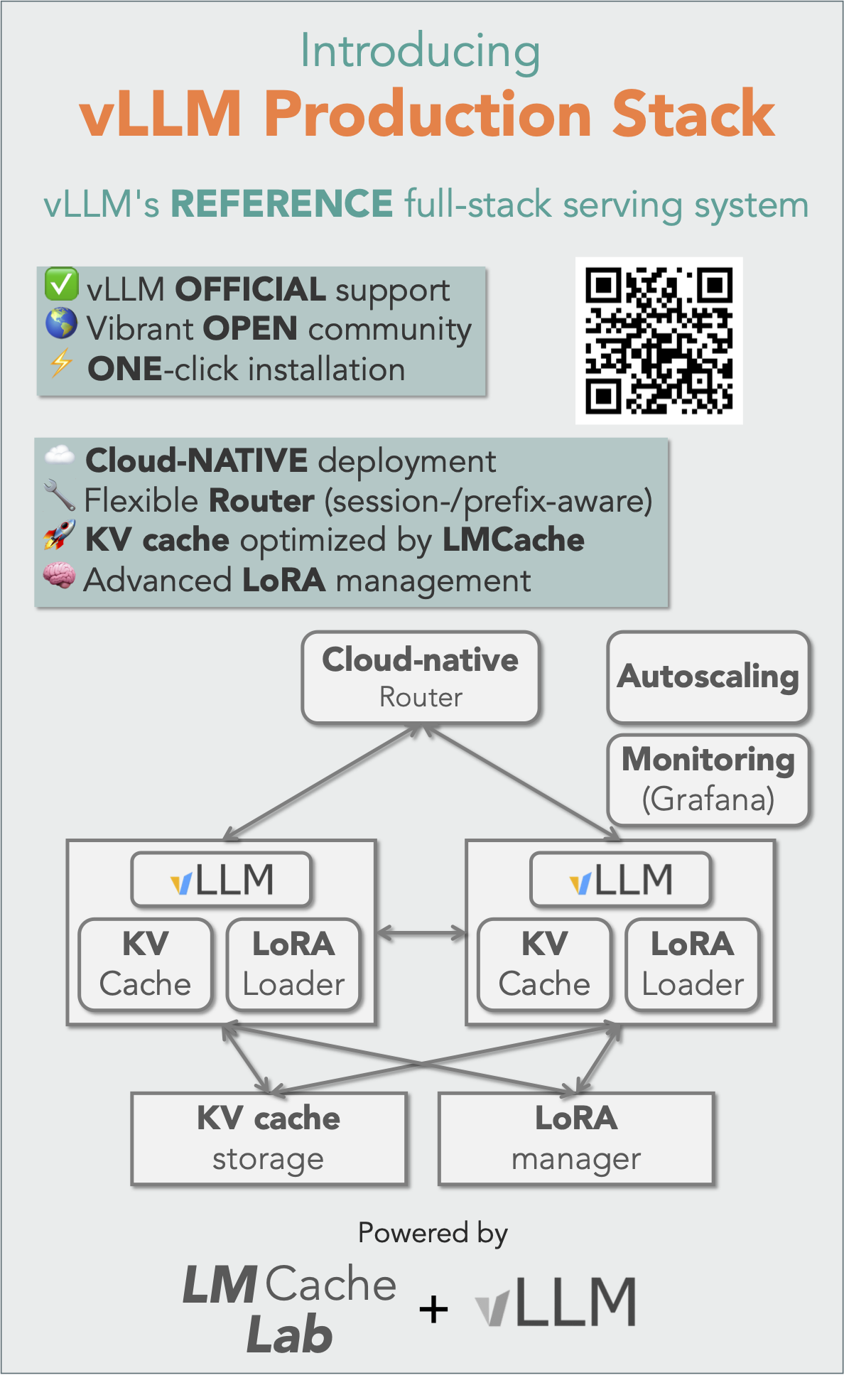

AGI Infra for All: vLLM Production Stack as the Standard for Scalable vLLM Serving

By LMCache Lab