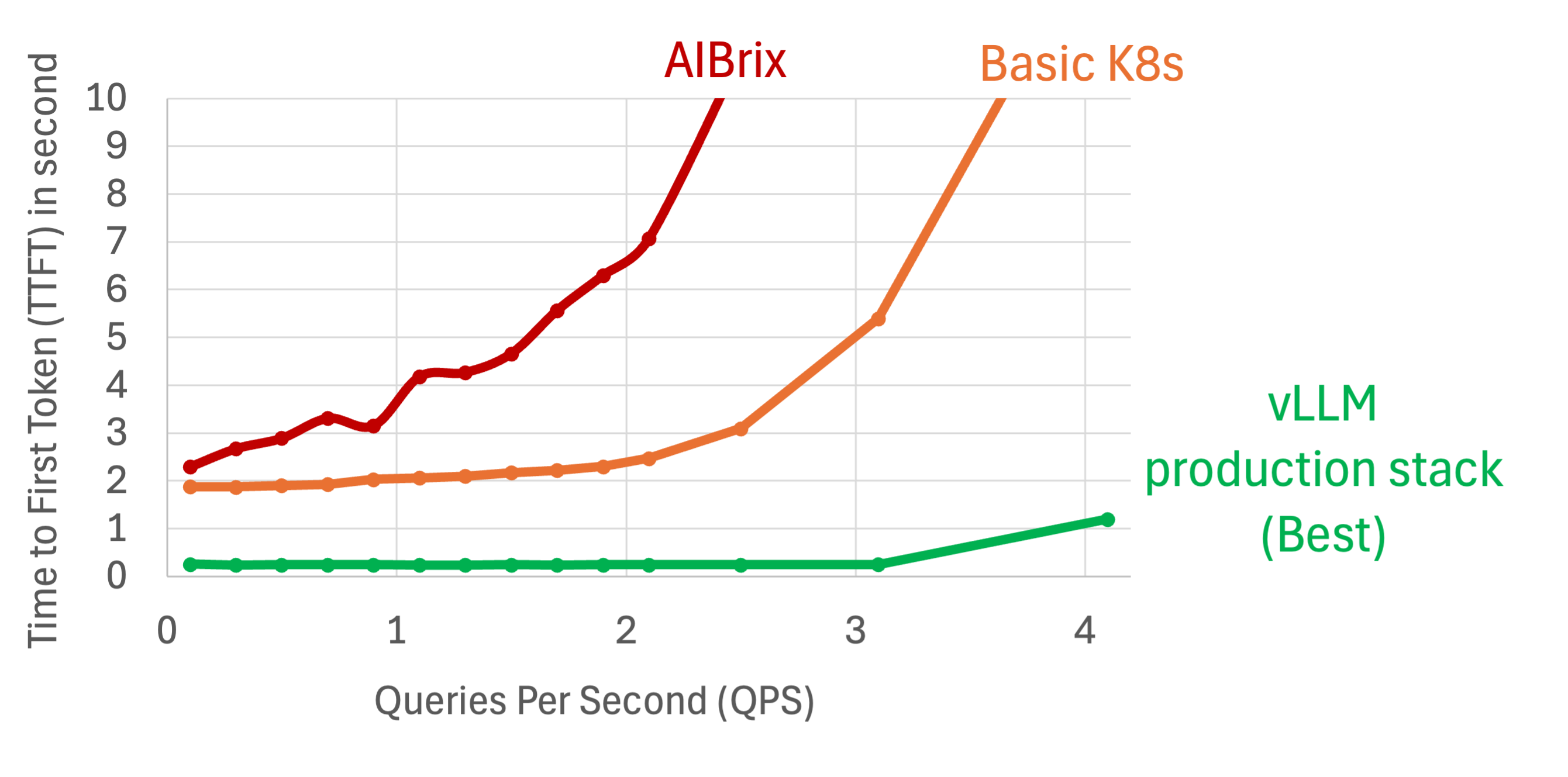

Open-Source LLM Inference Cluster Performing 10x FASTER than SOTA OSS Solution

By Production-Stack Team

Deploying LLMs in Clusters #2: running “vLLM production-stack” on AWS EKS and GCP GKE

By LMCache Team

Deploying LLMs in Clusters #1: running “vLLM production-stack” on a cloud VM

By LMCache Team

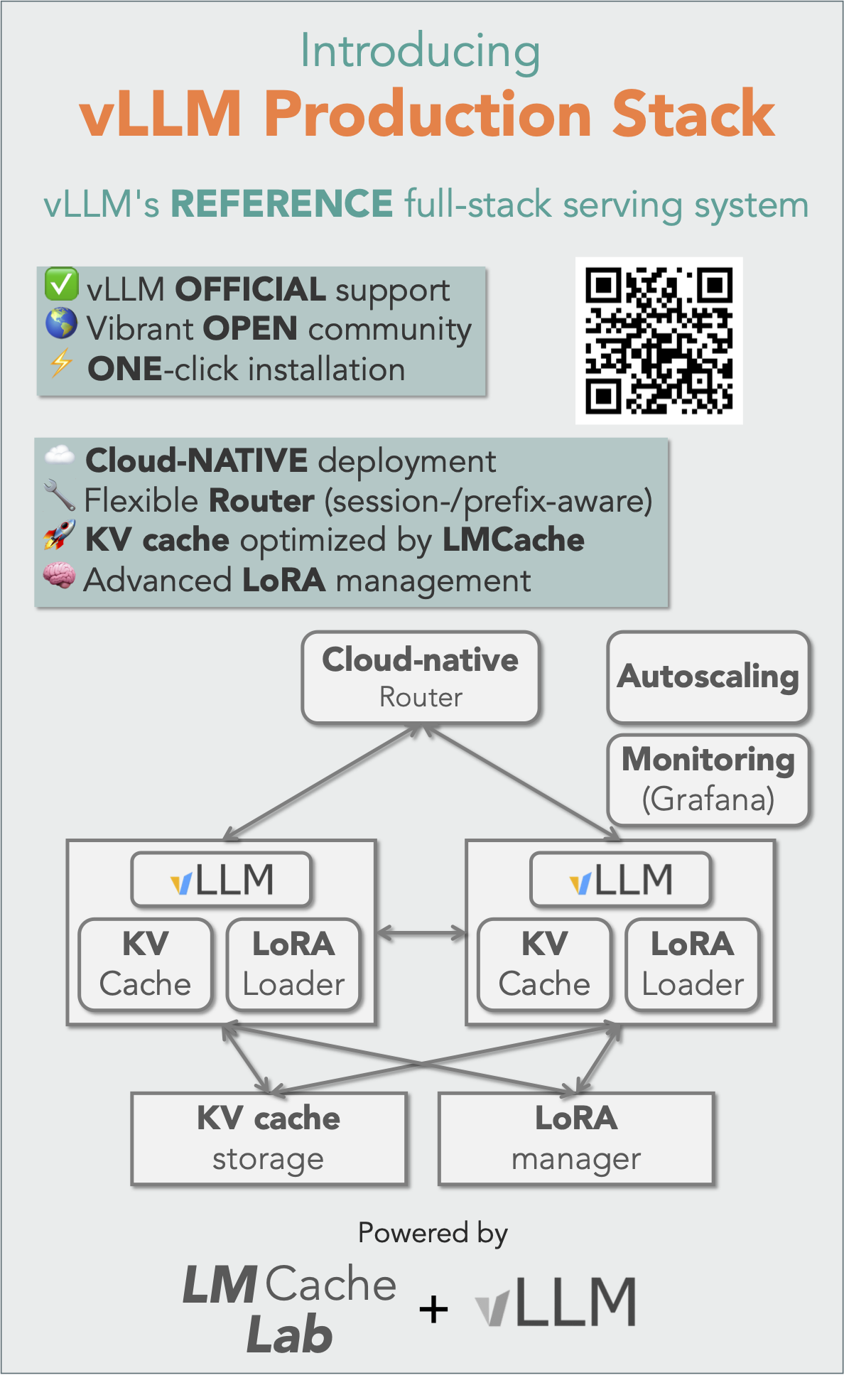

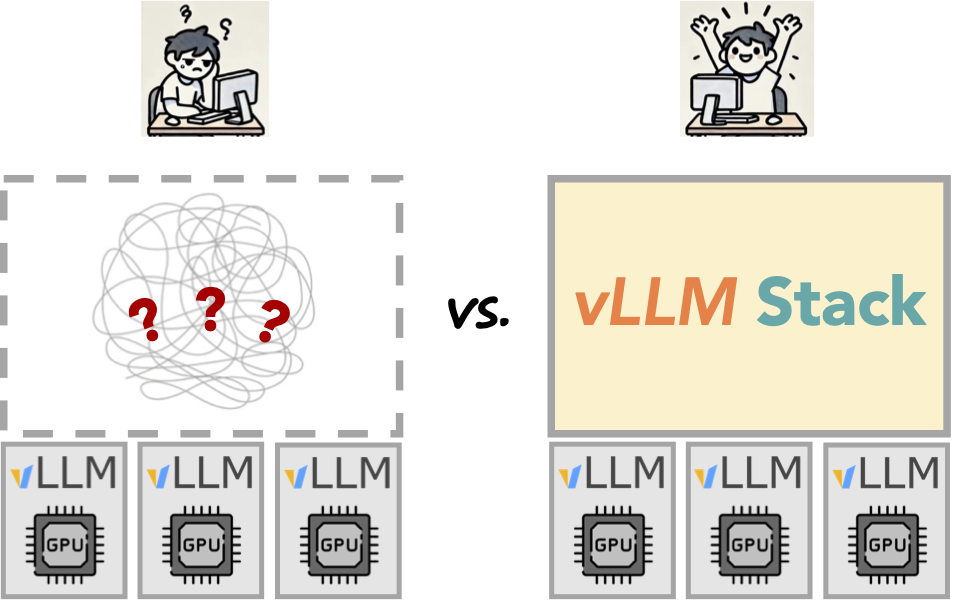

High Performance and Easy Deployment of vLLM in K8S with “vLLM production-stack”

By LMCache Team