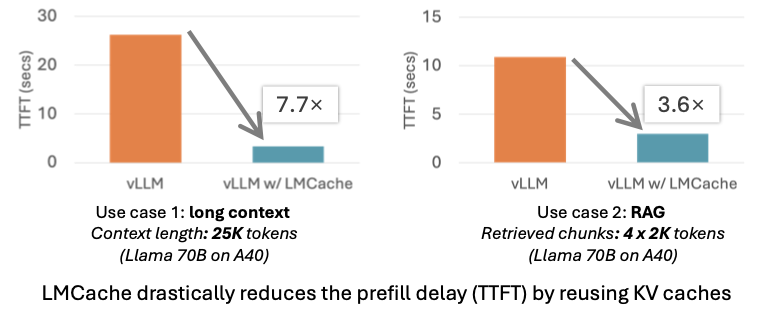

TL;DR: LMCache turbocharges vLLM with 7× faster access to 100× more KV caches, for both multi-turn conversation and RAG.

[💻 Source code] [📚 Paper1] [📚 Paper2] [🎬 3-minute introduction video]

LLMs are ubiquitous across industries, but when using them with long documents, it takes forever for the model even to spit out the first token.

Meet LMCache, a new open-source system developed at UChicago that generates the first token 3-10× faster than vLLM by efficiently managing long documents (and other sources of knowledge) for you.

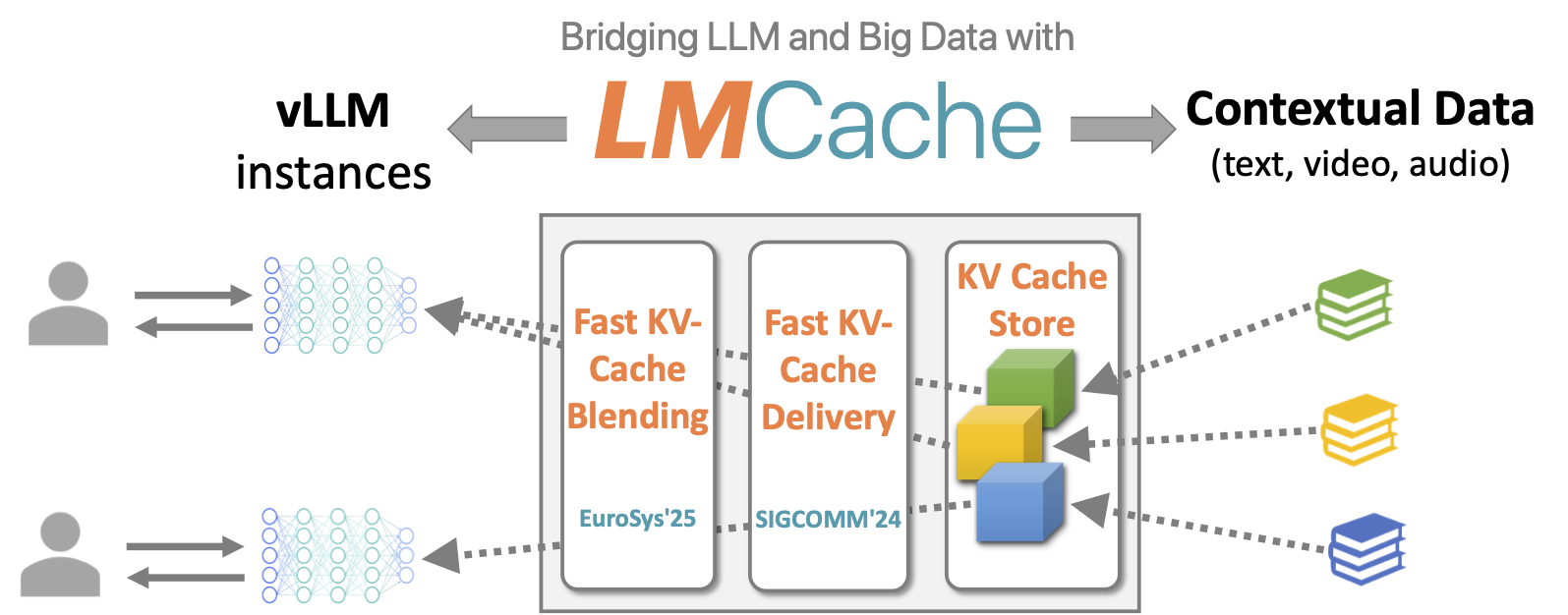

What is LMCache?

No matter how smart LLMs become, reading external texts, videos, and other content is always slow and costly for them. LMCache reduces this cost by letting LLMs read each text only once. Our insight is that most data is read repeatedly: popular books, chat histories, and news documents, for example. (Recall the Pareto principle: 20% of knowledge is used 80% of the time.)

By storing the KV caches (the LLM-usable representation) of all reusable texts, LMCache can reuse the KV caches of any reused text (not necessarily the prefix) in any serving engine instance. Developed at UChicago, this solution is already gaining significant interest from industry partners.
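To make the "not necessarily the prefix" point concrete, here is a toy sketch that counts how many tokens would need a fresh prefill under prefix-only reuse versus chunk-level reuse. Everything in it is an illustrative assumption rather than LMCache's code: the `ReuseTracker` class, the whitespace "tokenizer", and the content-hash keys are all hypothetical. It also ignores the small amount of recomputation that blending non-prefix caches requires, so the chunk-level number is a lower bound.

```python
import hashlib


def count_tokens(text):
    """Crude token count (whitespace split); a real system uses the model tokenizer."""
    return len(text.split())


def chunk_key(chunk):
    """Content hash of one chunk, independent of where it appears in the input."""
    return hashlib.sha256(chunk.encode("utf-8")).hexdigest()


def prefix_keys(chunks):
    """One key per position, each covering every chunk up to that position."""
    running = hashlib.sha256()
    keys = []
    for chunk in chunks:
        running.update(chunk.encode("utf-8") + b"\x00")
        keys.append(running.hexdigest())
    return keys


class ReuseTracker:
    """Hypothetical bookkeeping: remembers which prefixes and chunks were seen."""

    def __init__(self):
        self.seen_prefixes = set()
        self.seen_chunks = set()

    def record(self, chunks):
        self.seen_prefixes.update(prefix_keys(chunks))
        self.seen_chunks.update(chunk_key(c) for c in chunks)

    def prefill_tokens_prefix_only(self, chunks):
        """Tokens to recompute when only an identical leading prefix is reusable."""
        cost, reusable = 0, True
        for chunk, key in zip(chunks, prefix_keys(chunks)):
            reusable = reusable and key in self.seen_prefixes
            if not reusable:
                cost += count_tokens(chunk)
        return cost

    def prefill_tokens_any_chunk(self, chunks):
        """Tokens to recompute when any previously seen chunk is reusable."""
        return sum(count_tokens(c) for c in chunks
                   if chunk_key(c) not in self.seen_chunks)


system = "You are a helpful assistant."
doc_a = "alpha " * 100
doc_b = "beta " * 100

tracker = ReuseTracker()
tracker.record([system, doc_a, doc_b, "What is alpha?"])

# Same documents, retrieved in a different order, with a new question.
second_request = [system, doc_b, doc_a, "What is beta?"]
prefix_cost = tracker.prefill_tokens_prefix_only(second_request)  # 203
chunk_cost = tracker.prefill_tokens_any_chunk(second_request)     # 3
```

In this toy setup, swapping the order of two retrieved documents is enough to defeat prefix-only reuse, while chunk-level reuse only has to process the new question.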

Combined with vLLM, LMCache reduces the “time to first token” (TTFT) by 3-10× and saves GPU cycles in many LLM use cases, including multi-round QA and RAG.

For more details, please take a look at the 🎬 3-minute introduction to LMCache.

The Secret Sauces

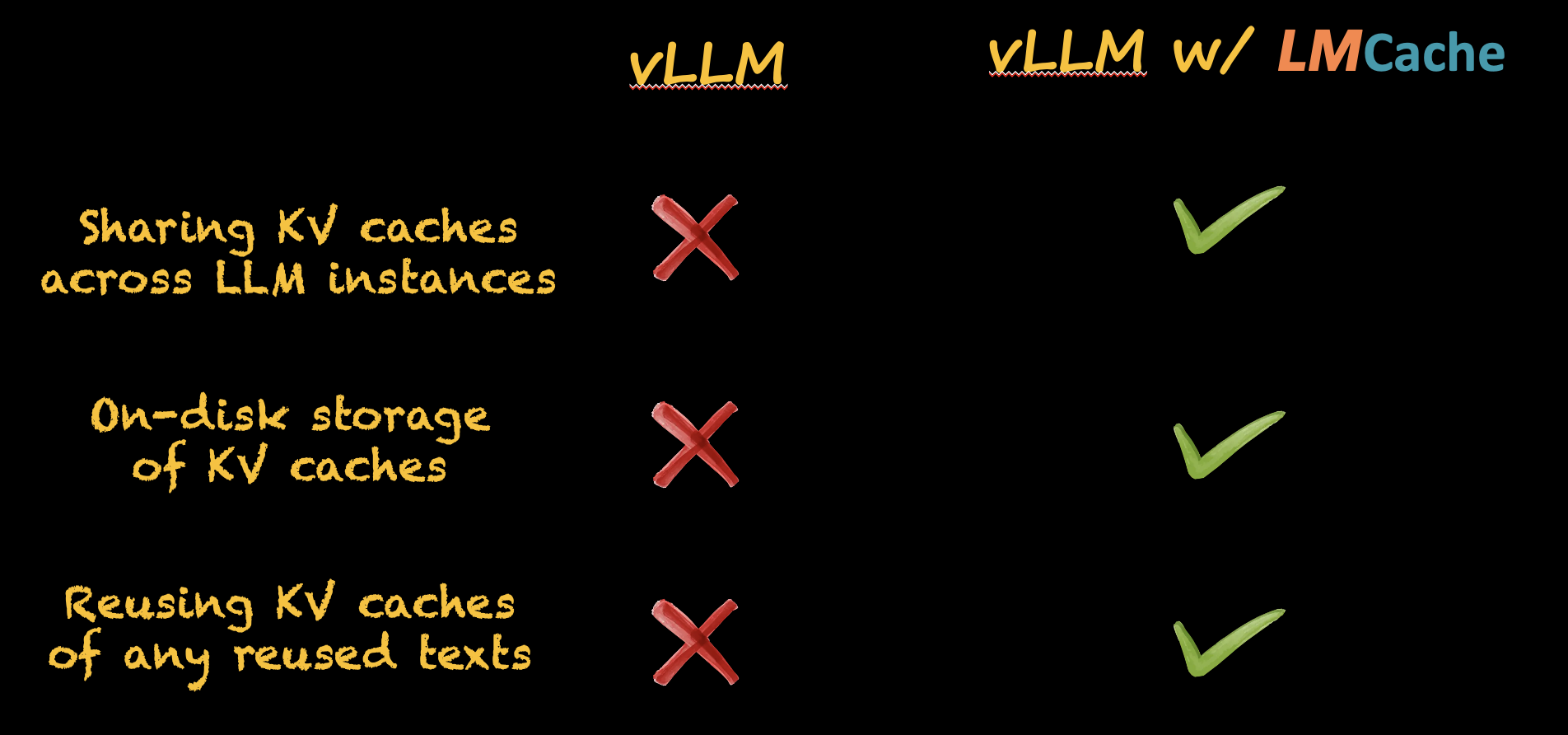

This table contrasts vLLM with and without LMCache:

At its core, LMCache incorporates two recent research projects from UChicago:

- CacheGen [SIGCOMM’24] efficiently encodes KV caches into bitstreams and stores them on disk. This allows an unlimited number of KV caches to be stored on cheap disks and shared across multiple vLLM instances. In contrast, vLLM stores KV caches only in a single LLM instance’s internal GPU and CPU memory. This capability is urgently needed in multi-round chat apps that use many vLLM instances to serve many users.

- CacheBlend [EuroSys’25] blends KV caches with minimal computation to preserve cross-attention. This allows multiple KV caches to be composed dynamically into a new KV cache. In contrast, vLLM only reuses the KV cache of the input prefix. This is particularly useful for RAG applications where multiple reused texts constitute the LLM input.
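The "encode to a bitstream, store on disk, share across instances" idea can be sketched in a few lines. This is only a stand-in under loud assumptions: CacheGen uses its own codec described in the paper, whereas the sketch below swaps in plain int8 quantization plus zlib, and the `DiskKVStore` class, file layout, and flat list of floats standing in for a KV cache are all hypothetical.

```python
import hashlib
import math
import os
import struct
import tempfile
import zlib


def encode_kv(values):
    """Quantize floats to int8 and deflate them (a stand-in, NOT CacheGen's codec)."""
    peak = max((abs(v) for v in values), default=0.0) or 1.0
    quantized = [round(v / peak * 127) for v in values]
    header = struct.pack("<If", len(values), peak)  # element count + scale
    payload = struct.pack(f"{len(values)}b", *quantized)
    return header + zlib.compress(payload)


def decode_kv(blob):
    """Invert encode_kv, up to quantization error."""
    count, peak = struct.unpack("<If", blob[:8])
    quantized = struct.unpack(f"{count}b", zlib.decompress(blob[8:]))
    return [q / 127 * peak for q in quantized]


class DiskKVStore:
    """Hypothetical store: one file per text in a directory that instances share."""

    def __init__(self, directory):
        self.directory = directory
        os.makedirs(directory, exist_ok=True)

    def _path(self, text_id):
        name = hashlib.sha256(text_id.encode("utf-8")).hexdigest()
        return os.path.join(self.directory, name + ".kv")

    def put(self, text_id, values):
        with open(self._path(text_id), "wb") as f:
            f.write(encode_kv(values))

    def get(self, text_id):
        try:
            with open(self._path(text_id), "rb") as f:
                return decode_kv(f.read())
        except FileNotFoundError:
            return None  # cache miss: the caller must recompute


# Two store objects on the same directory play the role of two serving instances.
shared_dir = tempfile.mkdtemp()
writer = DiskKVStore(shared_dir)
reader = DiskKVStore(shared_dir)

fake_kv = [math.sin(i / 10) for i in range(1000)]  # placeholder "KV cache"
writer.put("popular-book", fake_kv)
restored = reader.get("popular-book")
```

The point of the sketch is the shape of the design: once a cache is a compact byte string keyed by the text it represents, any instance that can reach the storage can load it instead of recomputing it.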

Get Started with LMCache

LMCache integrates with the latest vLLM (0.6.1.post2). To install LMCache, use the following command:

pip install lmcache lmcache_vllm

LMCache has the same interface as vLLM (both online serving and offline inference). For online serving, you can start an OpenAI API-compatible vLLM server with LMCache via:

lmcache_vllm serve lmsys/longchat-7b-16k --gpu-memory-utilization 0.8
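Once the server is up, it can be queried like any OpenAI API-compatible vLLM server. The following is a minimal client sketch using only the Python standard library. It assumes the server listens on vLLM's default port 8000 on localhost; the helper names `build_completion_request` and `complete` are made up for this example.

```python
import json
import urllib.request


def build_completion_request(prompt, port=8000,
                             model="lmsys/longchat-7b-16k", max_tokens=32):
    """Build a POST request for the OpenAI-compatible /v1/completions endpoint."""
    body = json.dumps({
        "model": model,
        "prompt": prompt,
        "max_tokens": max_tokens,
    }).encode("utf-8")
    return urllib.request.Request(
        f"http://localhost:{port}/v1/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def complete(prompt, **kwargs):
    """Send the request and return the generated text (requires a running server)."""
    with urllib.request.urlopen(build_completion_request(prompt, **kwargs)) as resp:
        return json.load(resp)["choices"][0]["text"]
```

Calling `complete(long_document + question)` twice with the same long document is a simple way to observe the effect of caching on the second call.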

To use vLLM’s offline inference with LMCache, simply add lmcache_vllm before the import of vLLM components. For example:

import lmcache_vllm.vllm as vllm

from lmcache_vllm.vllm import LLM
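Putting the import to work, a rough offline-inference sketch might look like the following. It leans on the statement that LMCache exposes the same interface as vLLM, so it assumes `LLM`, `SamplingParams`, and `generate` behave as they do in vLLM when reached through `lmcache_vllm.vllm`; that assumption has not been verified here. The helper names are hypothetical, and actually running `generate_with_lmcache` requires a GPU and the packages installed above.

```python
def prompts_sharing_context(context, questions):
    """Build prompts that all start with the same long context, so its KV cache can be reused."""
    return [f"{context}\n\nQuestion: {q}\nAnswer:" for q in questions]


def generate_with_lmcache(prompts, model="lmsys/longchat-7b-16k", max_tokens=64):
    """Run offline inference; assumes the vLLM API is available under lmcache_vllm.vllm."""
    import lmcache_vllm.vllm as vllm  # imported lazily: needs a GPU environment

    llm = vllm.LLM(model=model, gpu_memory_utilization=0.8)
    params = vllm.SamplingParams(temperature=0.0, max_tokens=max_tokens)
    outputs = llm.generate(prompts, params)
    return [out.outputs[0].text for out in outputs]
```

A typical use would be `generate_with_lmcache(prompts_sharing_context(long_document, ["What is it about?", "Who wrote it?"]))`, where every prompt repeats the same document.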

More detailed documentation will be available soon.

- Example: Sharing KV cache across multiple vLLM instances

LMCache supports sharing KV caches across different vLLM instances via the lmcache.server module. Here is a quick guide:

# Start lmcache server

lmcache_server localhost 65432

Then, start two vLLM instances with the LMCache config file:

wget https://raw.githubusercontent.com/LMCache/LMCache/refs/heads/dev/examples/example.yaml

# start the first vLLM instance

LMCACHE_CONFIG_FILE=example.yaml CUDA_VISIBLE_DEVICES=0 lmcache_vllm serve lmsys/longchat-7b-16k --gpu-memory-utilization 0.8 --port 8000

# start the second vLLM instance

LMCACHE_CONFIG_FILE=example.yaml CUDA_VISIBLE_DEVICES=1 lmcache_vllm serve lmsys/longchat-7b-16k --gpu-memory-utilization 0.8 --port 8001
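One way to check that the two instances really share caches is to send the same long prompt first to port 8000 and then to port 8001, and compare how long each takes to produce a single token. The sketch below does that with the standard library only. The function names are hypothetical, a one-token completion is used as a rough proxy for TTFT, and the `send` parameter exists only so the timing logic can be exercised without a live server.

```python
import json
import time
import urllib.request


def post_completion(port, payload):
    """POST a completion request to the vLLM instance on the given local port."""
    req = urllib.request.Request(
        f"http://localhost:{port}/v1/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def first_token_latency(prompt, port, model="lmsys/longchat-7b-16k", send=None):
    """Seconds to get a 1-token completion back, used as an approximation of TTFT."""
    send = send or post_completion
    start = time.perf_counter()
    send(port, {"model": model, "prompt": prompt, "max_tokens": 1})
    return time.perf_counter() - start


def compare_instances(prompt, ports=(8000, 8001), send=None):
    """Query each instance in order with the same prompt and report its latency."""
    return {port: first_token_latency(prompt, port, send=send) for port in ports}
```

If sharing works as described, one would expect the second instance to answer a long prompt noticeably faster than the first, since it can load the KV cache from the LMCache server instead of recomputing it.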