TL;DR

- vLLM boasts the largest open-source community in LLM serving, and “vLLM production-stack” offers a full vLLM-based inference stack with 10x better performance and easy cluster management.

- Today, we give a step-by-step demonstration of how to deploy “vLLM production-stack” across multiple nodes on AWS EKS and GCP GKE.

- Based on last week’s poll results, next week’s topic will be managing multiple models within a single Kubernetes cluster. Tell us what should come next: [poll]

[GitHub Link] | [More Tutorials] | [Get In Touch]

AWS Tutorial (click here)

GKE Tutorial (click here)

The Context

vLLM has taken the open-source community by storm, with unparalleled hardware and model support plus an active ecosystem of top-notch contributors. But until now, vLLM has mostly focused on single-node deployments.

vLLM production-stack is an open-source reference implementation of an inference stack built on top of vLLM, designed to run seamlessly on a cluster of GPU nodes. It adds four critical capabilities that complement vLLM’s native strengths.

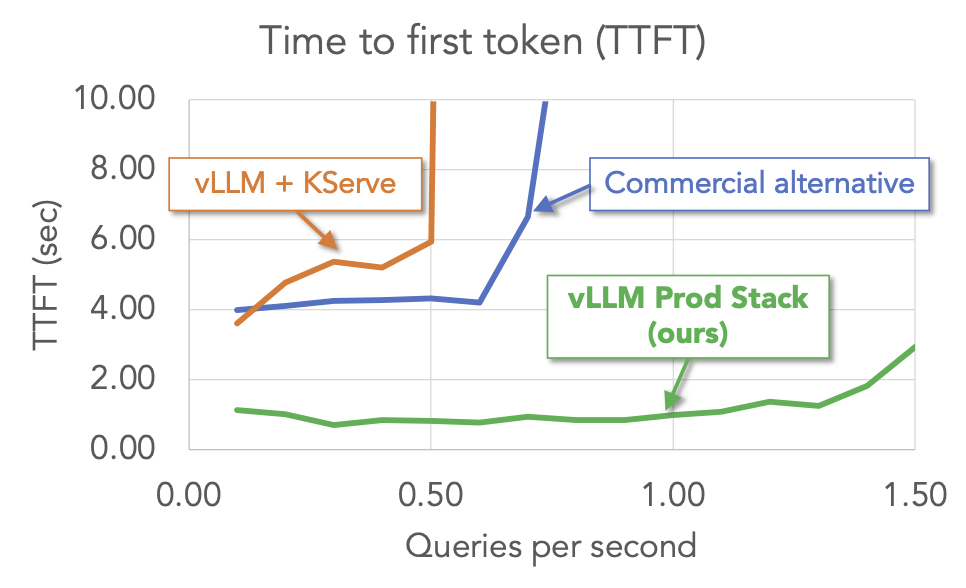

vLLM production-stack outperforms other LLM serving solutions, achieving higher throughput through smart routing and KV cache sharing.

Deploying a vLLM Production-Stack on AWS, GCP, and Lambda Labs

Setting up vLLM production-stack in the cloud requires three steps: deploying a Kubernetes cluster with GPU support, configuring a persistent volume for the cluster, and deploying the inference stack using Helm. Below is an overview of how to get started with minimal configurations on both AWS EKS and GCP GKE.
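For readers who want to see what the Helm step might look like on its own, here is a minimal sketch. The chart repository URL, the chart name `vllm/vllm-stack`, the release name `vllm`, and the `DRY_RUN` switch are assumptions for illustration and are not taken from this post; the cloud scripts below may do things differently, so check the GitHub repository for the exact commands.

```shell
#!/usr/bin/env bash
# Sketch only: install the stack with Helm once a GPU-enabled cluster exists.
# Assumed (not from this post): the chart repo URL, the chart name
# "vllm/vllm-stack", the release name "vllm", and the DRY_RUN switch.

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# deploy_stack VALUES_FILE: add the chart repo and install the release.
deploy_stack() {
  values_file=$1
  run helm repo add vllm https://vllm-project.github.io/production-stack
  run helm repo update
  run helm install vllm vllm/vllm-stack -f "$values_file"
}

# Usage (prints the commands without touching a cluster):
#   DRY_RUN=1 deploy_stack values.yaml
```

Running with `DRY_RUN=1` first is a cheap way to review what would be executed before pointing the function at a real cluster.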

Deploying on AWS with EKS (Full AWS Tutorial)

To run the service, go into the “deployment_on_cloud/aws” folder and run:

bash entry_point.sh YOUR_AWSREGION EXAMPLE_YAML_PATH

Expected output:

    NAME                                            READY   STATUS    RESTARTS   AGE
    vllm-deployment-router-69b7f9748d-xrkvn         1/1     Running   0          75s
    vllm-llama8b-deployment-vllm-696c998c6f-mvhg4   1/1     Running   0          75s
    vllm-llama8b-deployment-vllm-696c298d9s-fdhw4   1/1     Running   0          75s

Clean up the service (not including VPC) with:

bash clean_up.sh production-stack YOUR_AWSREGION

The entry_point.sh script will:

- Set up an EKS cluster with GPU nodes.

- Configure Amazon EFS for future use as a persistent volume.

- Configure security groups for EFS and allow NFS traffic from EKS nodes.

- Install and deploy the vLLM stack using Helm.

- Validate the setup by checking the status of the pods.
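The last validation step can also be repeated by hand. The helper below is a hypothetical sketch (the name `all_pods_ready` is ours, not part of the repository scripts): it reads `kubectl get pods` output and succeeds only when every listed pod is Running with all of its containers ready.

```shell
# all_pods_ready: read `kubectl get pods` output on stdin and succeed only if
# at least one pod is listed and every pod is Running with READY n/n.
# Hypothetical helper for illustration; not part of entry_point.sh.
all_pods_ready() {
  awk '
    NR == 1 { next }                 # skip the header row
    NF == 0 { next }                 # ignore blank lines
    {
      seen = 1
      split($2, ready, "/")          # READY column, e.g. "1/1"
      if ($3 != "Running" || ready[1] != ready[2]) bad = 1
    }
    END { if (bad || !seen) exit 1 }
  '
}

# Usage against a live cluster:
#   kubectl get pods | all_pods_ready && echo "stack is up"
```

Because the function only parses text, it can be tried against saved output before running it on a live cluster.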

Deploying on Google Cloud Platform (GCP) with GKE (Full GKE Tutorial)

To run the service, go to “deployment_on_cloud/gcp” and run:

sudo bash entry_point.sh YAML_FILE_PATH

Pods for the vLLM deployment should transition to the Ready and Running states.

Expected output:

    NAME                                            READY   STATUS    RESTARTS   AGE
    vllm-deployment-router-69b7f9748d-xrkvn         1/1     Running   0          75s
    vllm-opt125m-deployment-vllm-696c998c6f-mvhg4   1/1     Running   0          75s
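Once the pods are running, a quick request through the router confirms that the stack actually serves traffic. The sketch below relies on vLLM's OpenAI-compatible `/v1/models` and `/v1/completions` endpoints. The Service name `vllm-router-service`, its port 80, the local port 30080, and the model name `facebook/opt-125m` are assumptions not stated in this post, so adjust them to match your Helm values.

```shell
# Sketch only. Assumed (not from this post): the router Service is named
# "vllm-router-service" on port 80 and the served model is "facebook/opt-125m".

# completion_payload MODEL PROMPT: build the JSON body for /v1/completions.
# Note: no JSON escaping is done, so keep quotes out of MODEL and PROMPT.
completion_payload() {
  printf '{"model": "%s", "prompt": "%s", "max_tokens": 16}' "$1" "$2"
}

# smoke_test BASE_URL MODEL: list the models, then request a short completion.
smoke_test() {
  curl -sf "$1/v1/models" &&
  curl -sf "$1/v1/completions" \
    -H "Content-Type: application/json" \
    -d "$(completion_payload "$2" "Once upon a time,")"
}

# Usage:
#   kubectl port-forward svc/vllm-router-service 30080:80 &
#   smoke_test http://localhost:30080 facebook/opt-125m
```

A non-zero exit status from `smoke_test` means one of the two requests failed, which is usually enough to tell a routing problem from a model that is still loading.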

Clean up the service with:

bash clean_up.sh production-stack

The entry_point.sh script will:

- Set up a GKE cluster with GPU nodes.

- Install and deploy the vLLM stack using Helm.

Deploying on a Lambda Labs VM (Lambda Labs Tutorial)

Last week we released our Lambda Labs tutorial, which sets up a mini Kubernetes cluster on a single VM. Check out the video here!

Conclusion

We are releasing this tutorial so that you can run vLLM production-stack on AWS EKS and GCP GKE from scratch. More features, including Terraform deployment and AWS autoscaling, are on their way. The next blog post will cover serving multiple models within the same Kubernetes cluster.

Stay tuned and tell us what we should do next! [Poll]

Join us to build a future where every application can harness the power of LLM inference—reliably, at scale, and without breaking a sweat. Happy deploying!

Contacts:

- GitHub: https://github.com/vllm-project/production-stack

- Chat with the Developers Interest Form

- vLLM Slack

- LMCache Slack