We’re thrilled to share that LMCache has officially crossed 5,000 GitHub stars! 🚀 This milestone is not just a number — it’s a strong signal that KV cache technology has become a first-class citizen in the LLM inference stack, and that our community is leading the way.

What is LMCache?

LMCache is the first open-source KV cache library designed to turbocharge LLM inference efficiency. It provides a production-ready foundation for manipulating, storing, and reusing KV cache at scale.

Key capabilities include:

- KV cache CPU offloading → keeps KV cache in CPU memory so it can be reused across multiple queries, not just within a single inference run.

- Cross-engine sharing → unlocks interoperability across inference engines.

- Inter-GPU KV transfer → enables pipelining and prefill-decode (PD) disaggregation.

- Experimental features → compression, blending (for RAG and agent workflows), and smarter cache mining to maximize hit rates.

LMCache focuses almost exclusively on real production deployments, where efficiency and scale matter most.

We’re also taking the next step by proposing LMCache to the PyTorch Foundation, ensuring the project continues to grow as a community-driven standard.

The Journey to 5K Stars

When LMCache launched in June 2024, KV cache was barely discussed outside research papers. Few predicted that KV cache would become central to improving throughput and reducing inference latency across the industry.

Fast-forward one year, and the momentum is clear:

- KV cache offloading and reuse have become standard techniques for scaling LLM inference.

- LMCache has been adopted in production by many companies to cut costs and latency.

- The project has become a dependency for other OSS efforts like Dynamo, llm-d, and vLLM production stack, further boosting adoption.

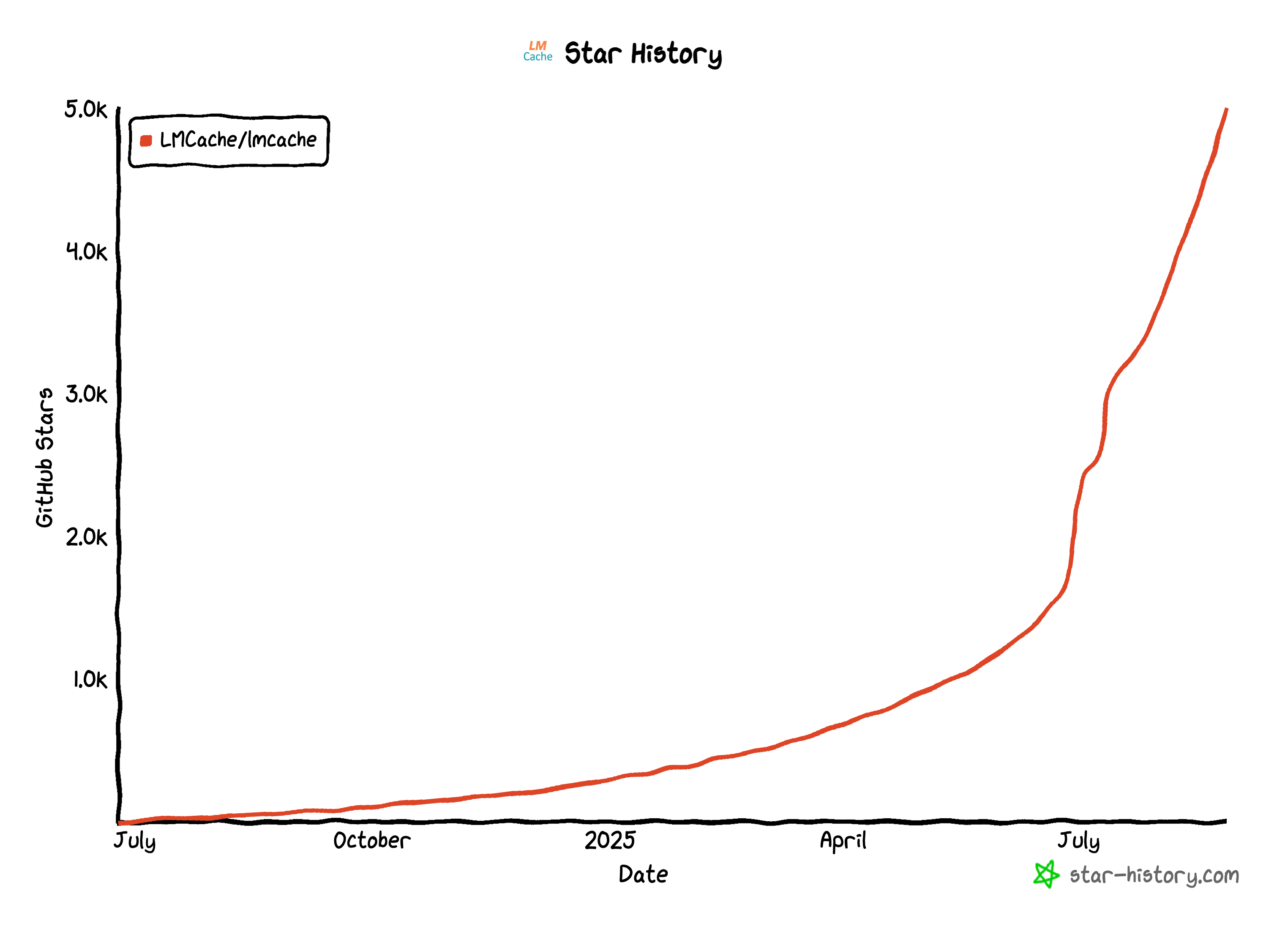

📈 You can view the star growth here: https://www.star-history.com/#LMCache/lmcache

LMCache’s GitHub star growth over time

Community Power

LMCache originated as a research prototype developed at the University of Chicago. Since then, it has evolved into a thriving open-source ecosystem thanks to contributions from a diverse global community, including engineers from IBM, NVIDIA, Red Hat, Microsoft, Redis, Cohere, AMD, Tencent, Weka, ByteDance, and Pliops, and researchers from Stanford, MIT, UC Berkeley, CMU, and many other institutions.

This broad collaboration reflects the project's value across both academia and industry.

Looking Ahead

The 5K star milestone is just the beginning. We’re already working on:

- Expanding LMCache integrations across cloud and OSS inference stacks.

- Evolving KV cache persistence and disaggregation into standard building blocks for LLM infrastructure.

- Continuing the process of donating LMCache to a larger open-source foundation to secure its long-term future.

How You Can Get Involved

We’d love for you to join us on the next phase of LMCache’s journey:

- ⭐ Star the project on GitHub

- 💬 Join our Slack community

- 📝 Fill out our interest form

- 📚 Read the documentation

- 🛠 Pick up a good first issue

💡 Final Note

5,000 stars in just over a year is a community achievement. It shows that we all bet on the right idea: KV cache isn't a side optimization but a core building block for the future of LLM infrastructure.

Thank you for building this future with us. Here’s to the next 5,000! 🚀